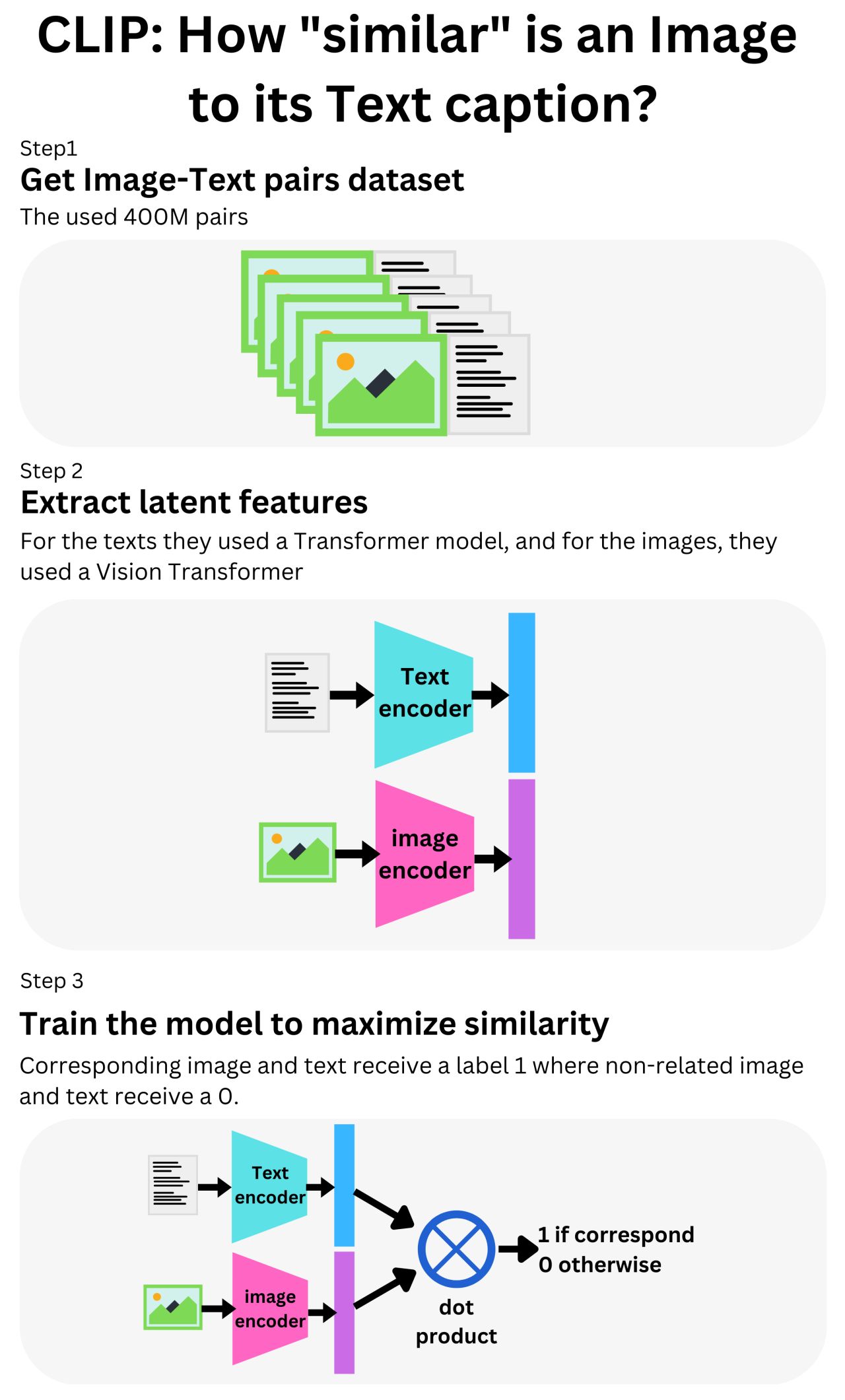

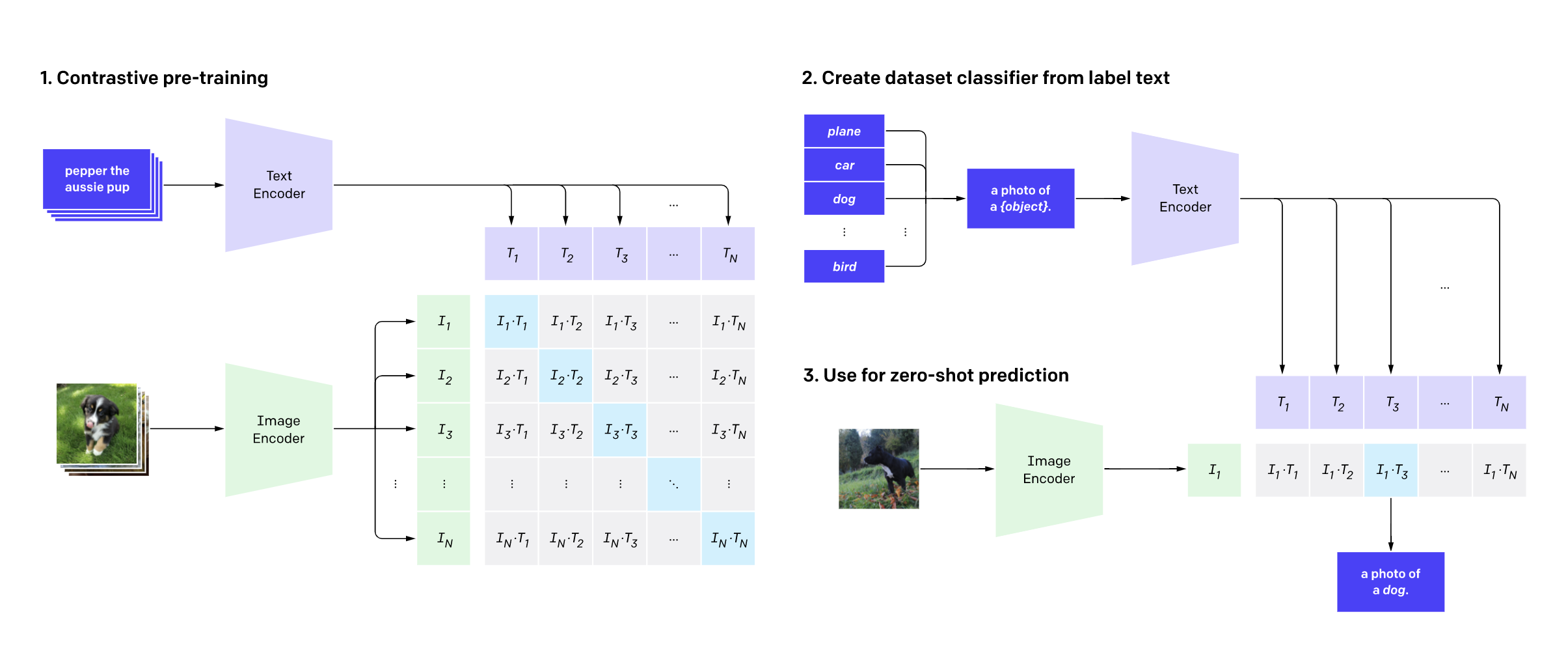

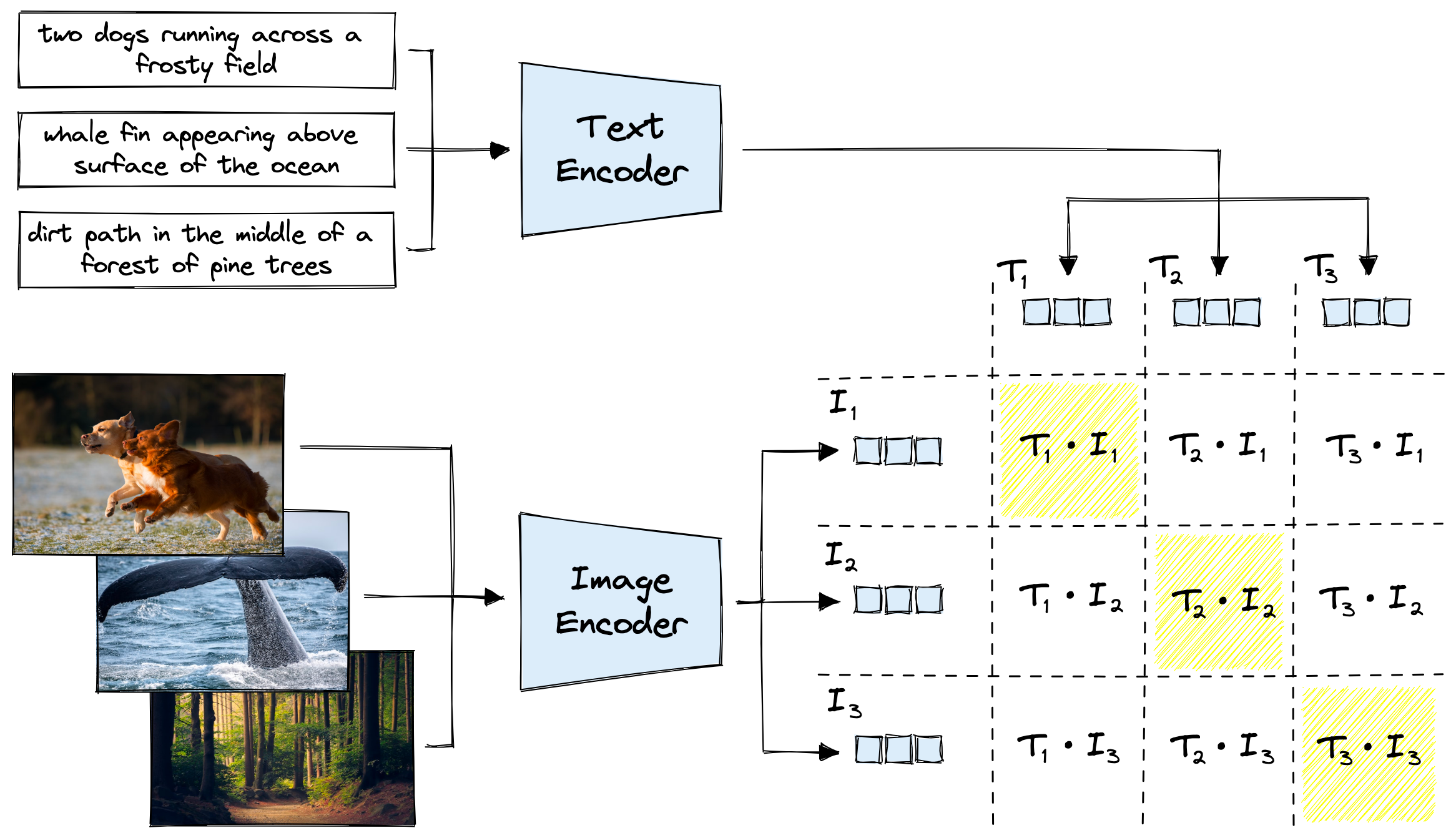

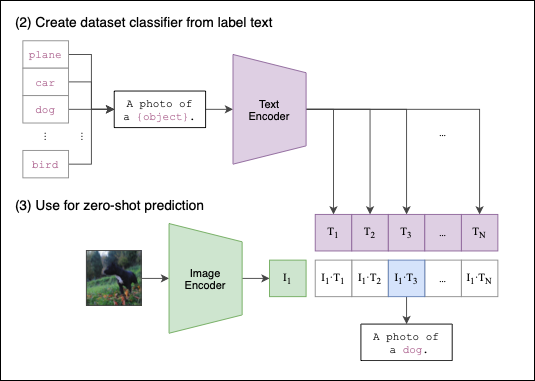

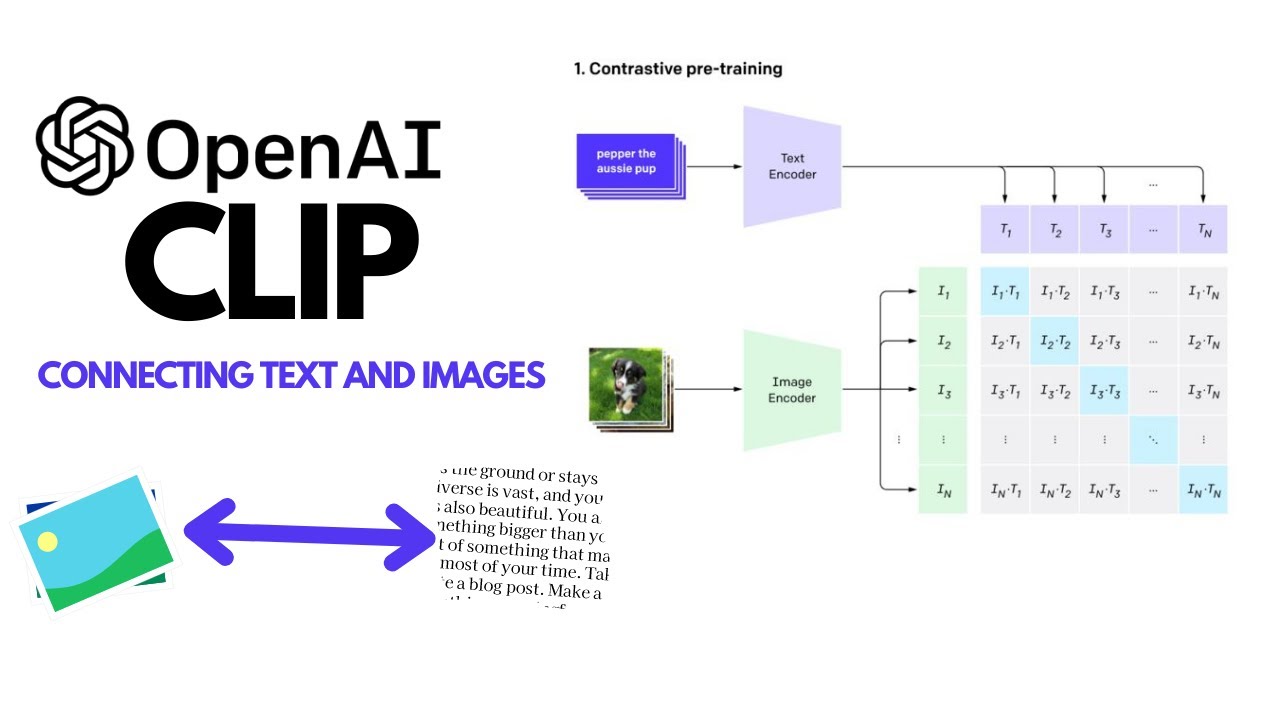

CLIP: The Most Influential AI Model From OpenAI — And How To Use It | by Nikos Kafritsas | Towards Data Science

HYCYYFC Mini Motor Double-Diaphragm Coupling, Lightweight Coupling with 2.13-Inch Clip Mount for Encoder : Amazon.it: Business, Industry & Science

OpenAI's CLIP Explained and Implementation | Contrastive Learning | Self-Supervised Learning - YouTube

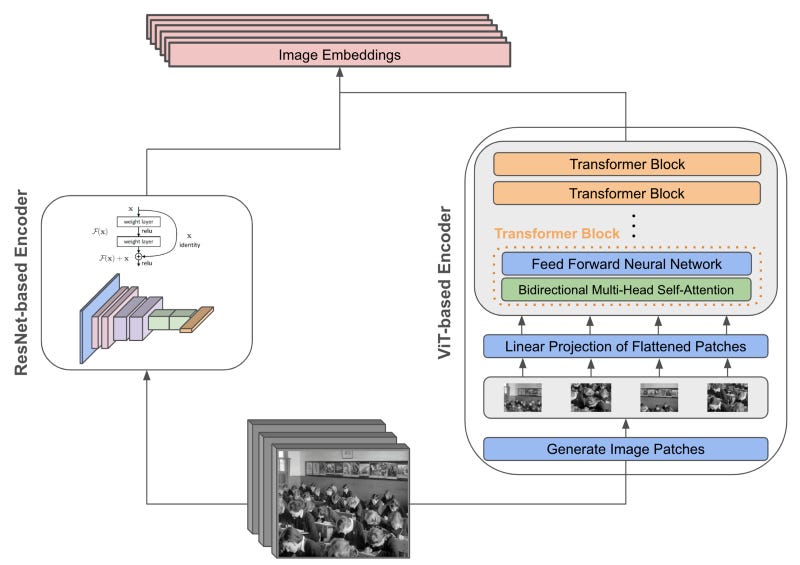

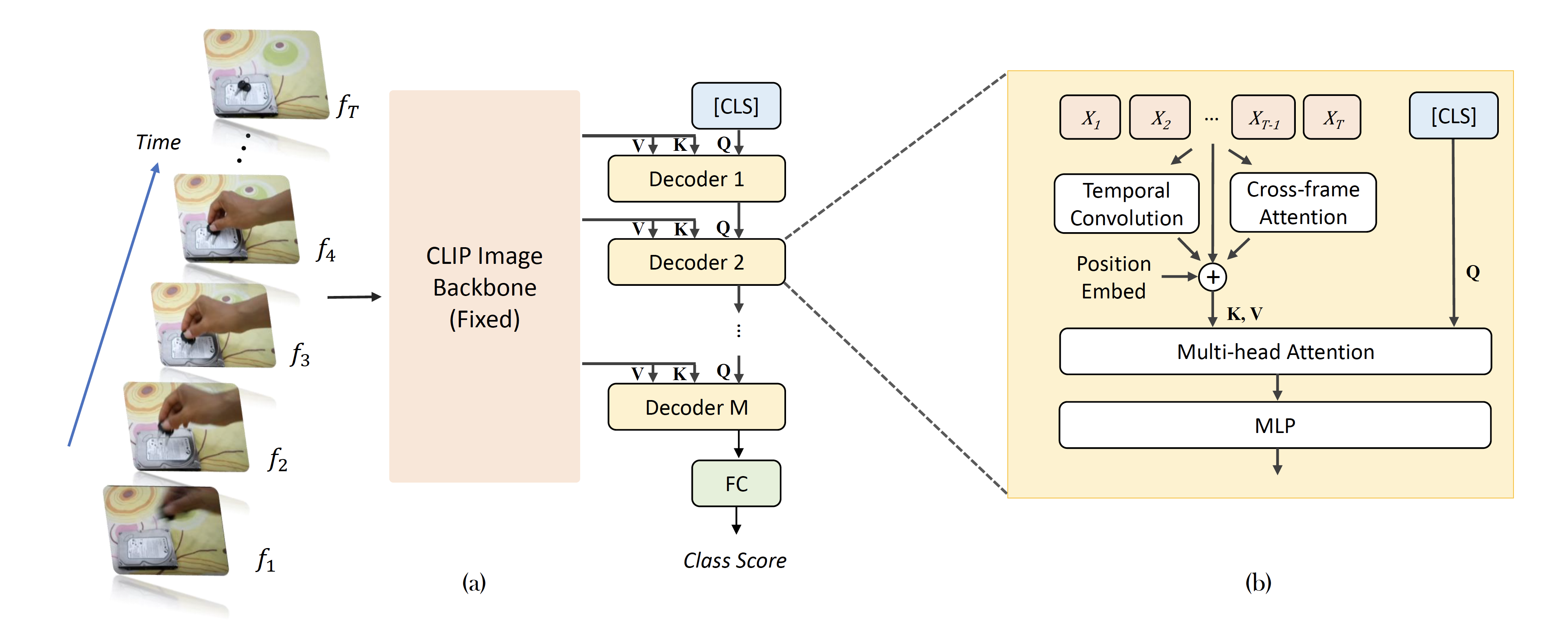

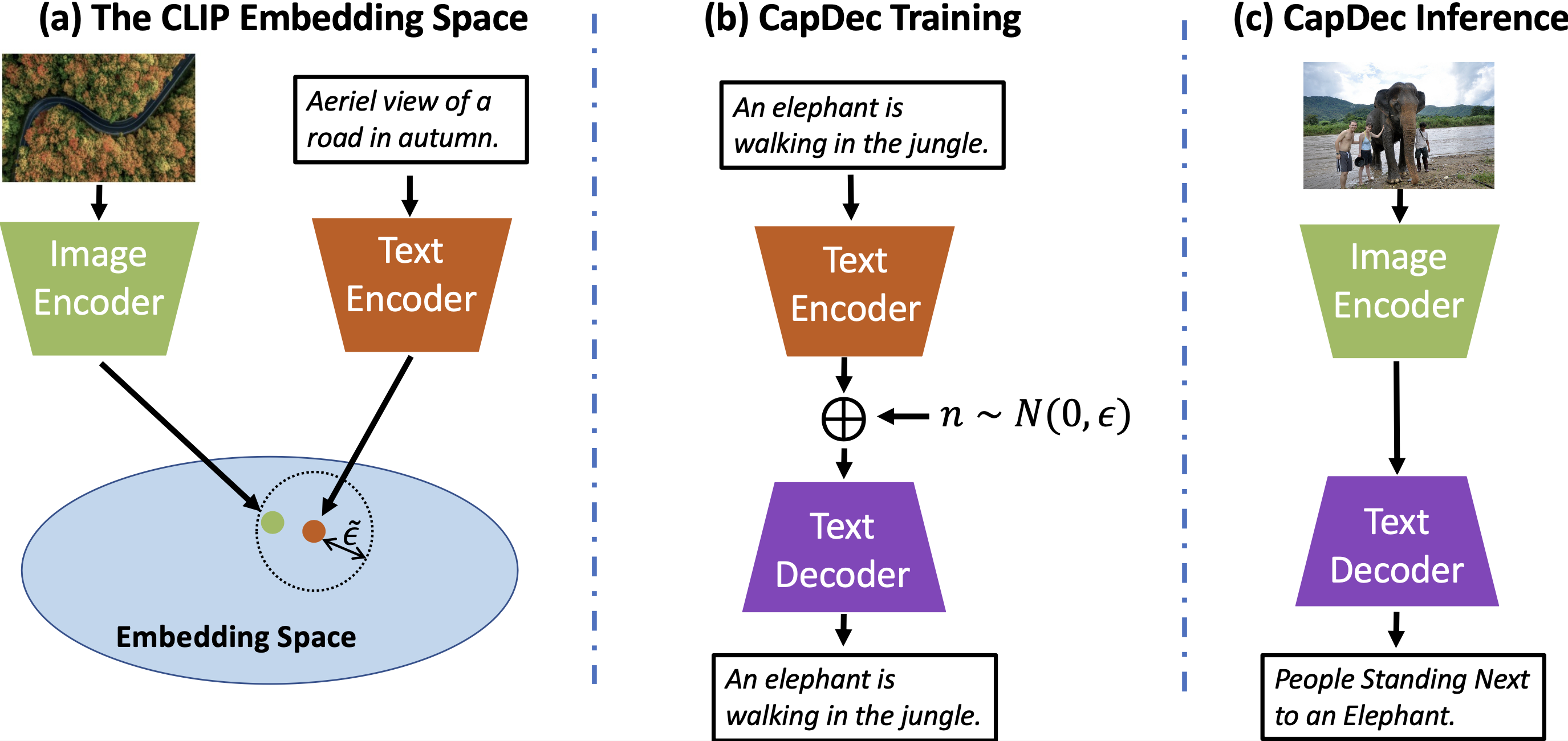

Sensors | Free Full-Text | Sleep CLIP: A Multimodal Sleep Staging Model Based on Sleep Signals and Sleep Staging Labels

Proposed approach of CLIP with Multi-headed attention/Transformer Encoder. | Download Scientific Diagram

How do I decide on a text template for CoOp:CLIP? | AI-SCHOLAR | AI: (Artificial Intelligence) Articles and technical information media

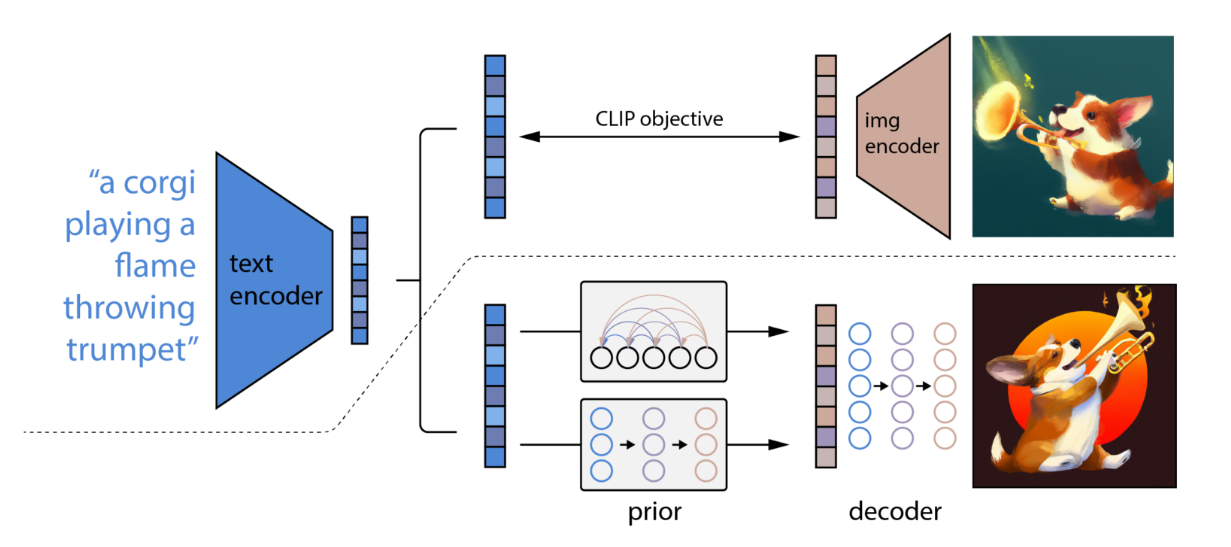

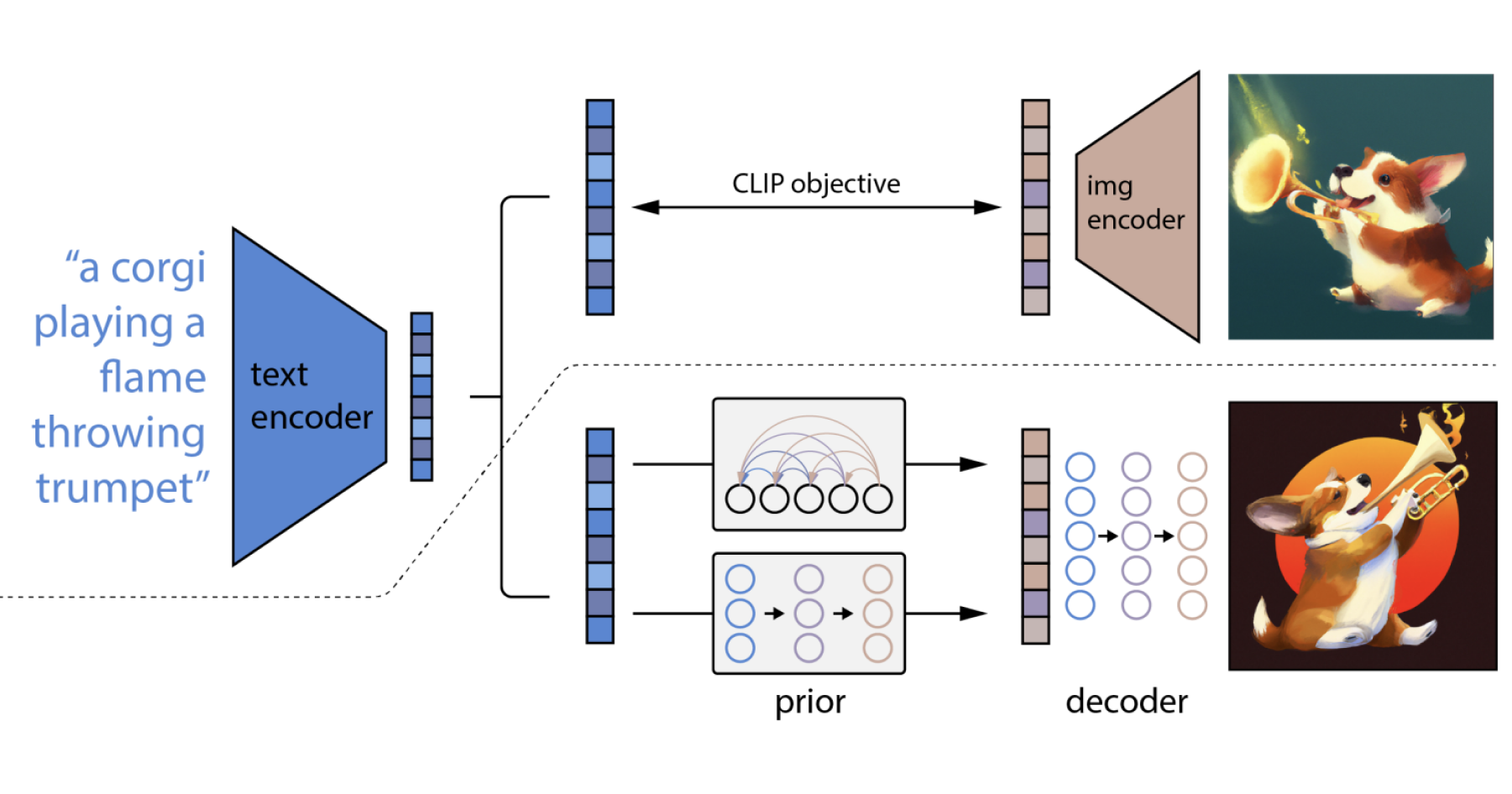

From DALL·E to Stable Diffusion: How Do Text-to-Image Generation Models Work? - Edge AI and Vision Alliance

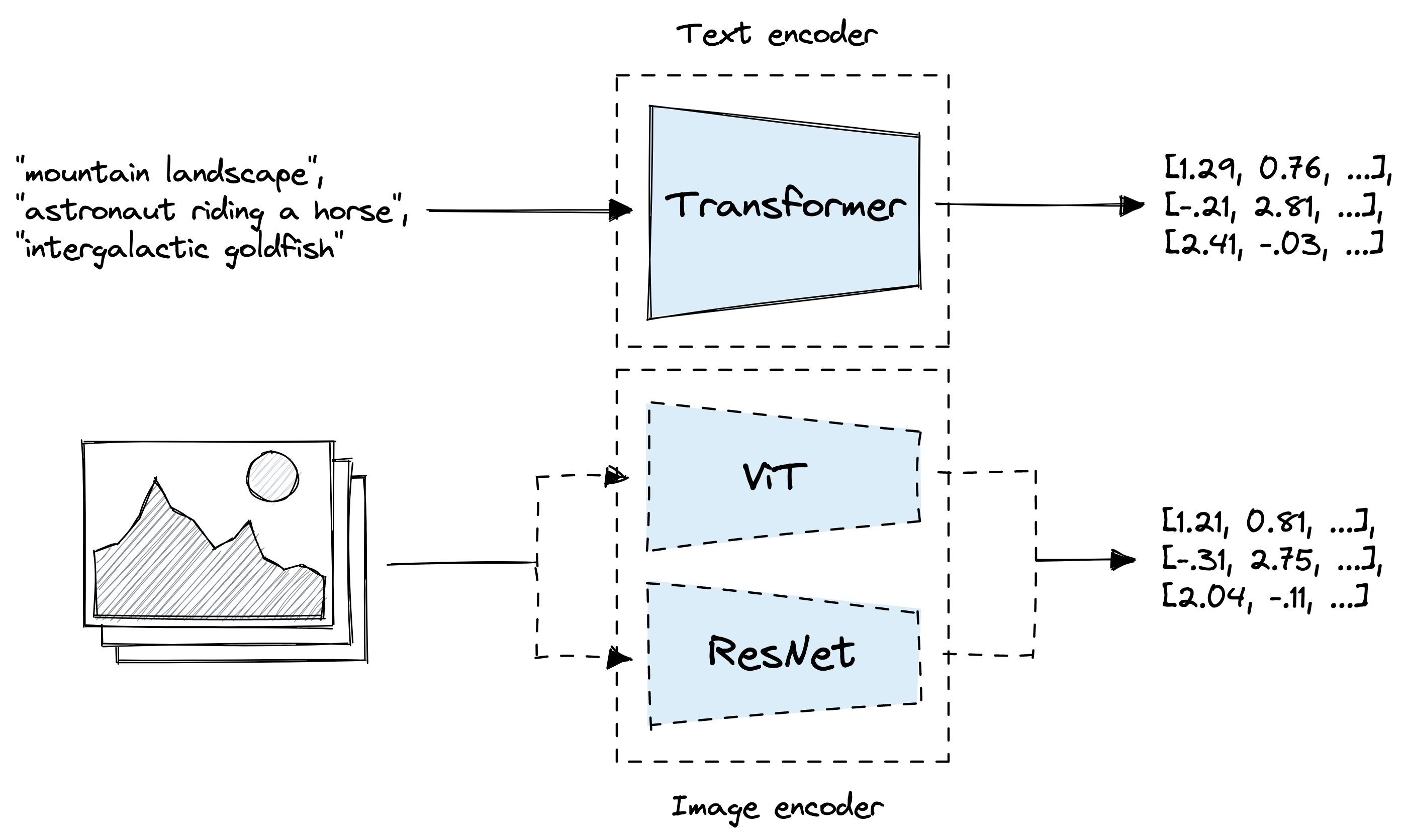

GitHub - jina-ai/executor-clip-encoder: Encoder that embeds documents using either the CLIP vision encoder or the CLIP text encoder, depending on the content type of the document.

Meet 'Chinese CLIP,' An Implementation of CLIP Pretrained on Large-Scale Chinese Datasets with Contrastive Learning - MarkTechPost